CUDA Toolkit Explained: How GPU Computing Accelerates AI Workloads

As artificial intelligence, machine learning, and data-intensive applications continue to scale, traditional CPU-based computing often can’t keep up. Companies building modern software need faster processing and efficient ways to handle massive workloads.

That’s where the CUDA Toolkit comes in. Developed by NVIDIA, CUDA has become a foundational technology for GPU-accelerated computing, powering everything from machine learning models to high-performance computing and real-time data processing.

In this article, we’ll break down what the CUDA Toolkit is, how it works, how to install and verify it, and why it has become essential for companies working with AI and advanced computing workloads.

What is the CUDA Toolkit?

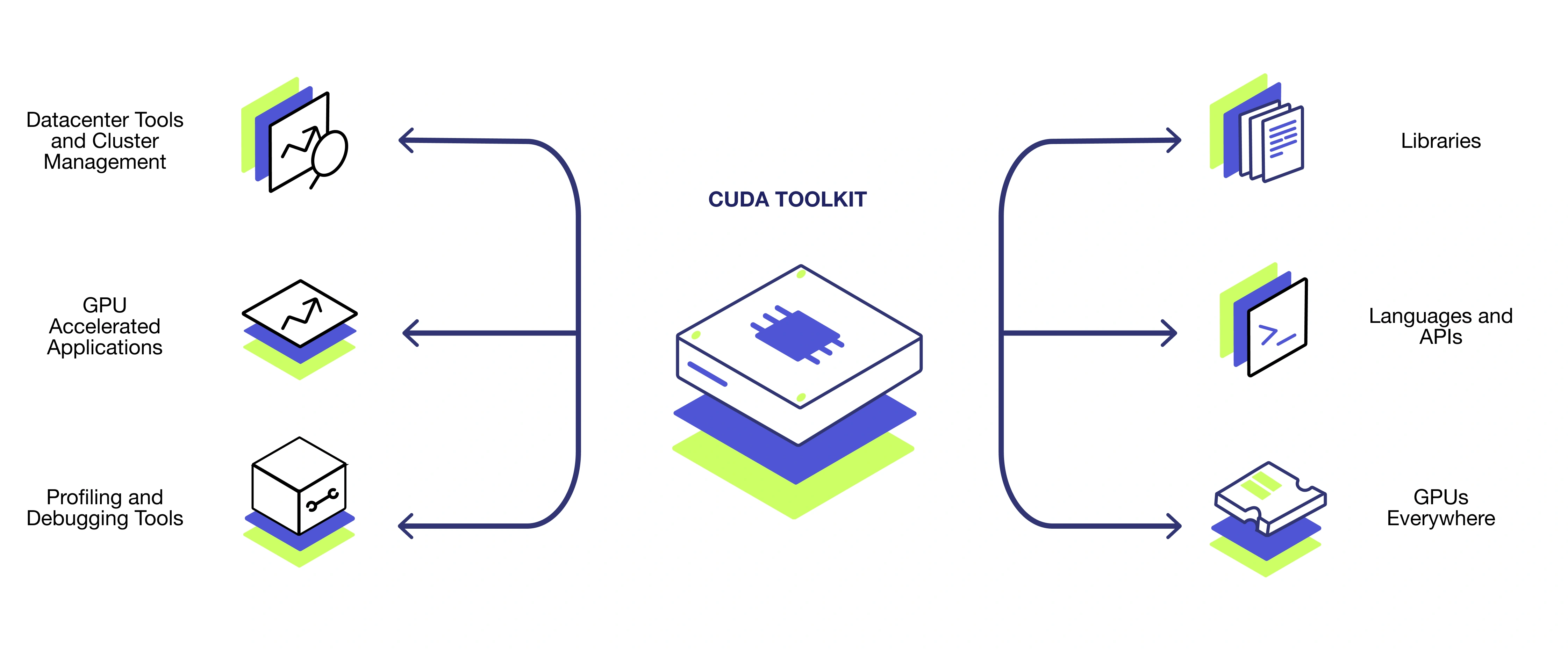

The CUDA Toolkit is a software development platform that allows developers to use NVIDIA GPUs for general-purpose computing.

Instead of relying only on CPUs, CUDA enables GPU-accelerated computing. It unlocks massive parallel processing power that can dramatically improve performance for compute-heavy, parallelizable tasks.

The toolkit includes:

- GPU-accelerated libraries (such as cuBLAS and cuFFT) and the CUDA APIs

- The nvcc compiler for CUDA C/C++ code

- Debugging and profiling tools (such as cuda-gdb and NVIDIA Nsight)

- Drivers and runtime components

With these tools, developers can build, optimize, and deploy applications that take full advantage of modern NVIDIA GPUs. In short, CUDA turns GPUs into programmable engines for high-performance computing.

How CUDA software enables GPU-accelerated computing

At its core, CUDA lets developers write functions, called kernels, that run across thousands of GPU threads at once. This approach is especially powerful for:

- Matrix operations

- Large-scale data processing

- Image and video processing

- Scientific simulations

By distributing work across thousands of GPU cores, CUDA can dramatically reduce execution time compared to CPU-only processing, provided the workload parallelizes well. This is why CUDA is widely used in deep learning frameworks, AI inference engines, data analytics pipelines, and high-performance computing environments.
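To make "thousands of operations at once" concrete, the sketch below uses plain Python to simulate on the CPU how a CUDA kernel launch assigns one array element to each GPU thread. It is an illustration under simplifying assumptions, not GPU code. Real kernels are typically written in CUDA C++ and launched with the `<<<blocks, threadsPerBlock>>>` syntax, and the function names here are hypothetical. The index calculation mirrors the standard `blockIdx.x * blockDim.x + threadIdx.x` idiom.

```python
from typing import List


def blocks_needed(n: int, threads_per_block: int = 256) -> int:
    """Smallest number of blocks so that blocks * threads_per_block >= n
    (the usual ceiling division used to size a CUDA grid)."""
    return (n + threads_per_block - 1) // threads_per_block


def simulated_vector_add(a: List[float], b: List[float], threads_per_block: int = 256) -> List[float]:
    """CPU simulation of a 1-D CUDA vector-add kernel: one 'thread' per element."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    n = len(a)
    out = [0.0] * n
    # On a GPU, every (block, thread) pair below would run in parallel;
    # here they run one after another so the indexing can be inspected.
    for block_idx in range(blocks_needed(n, threads_per_block)):
        for thread_idx in range(threads_per_block):
            # Same formula as blockIdx.x * blockDim.x + threadIdx.x in CUDA C++.
            i = block_idx * threads_per_block + thread_idx
            if i < n:  # threads past the end of the array do nothing
                out[i] = a[i] + b[i]
    return out


if __name__ == "__main__":
    print(blocks_needed(1000))  # 4 blocks of 256 threads cover 1,000 elements
    print(simulated_vector_add([1.0, 2.0, 3.0], [10.0, 20.0, 30.0], threads_per_block=2))
```

On a real GPU the nested loops disappear, because the hardware schedules the (block, thread) pairs to run in parallel. The `if i < n` guard matters because the last block usually has more threads than remaining elements.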

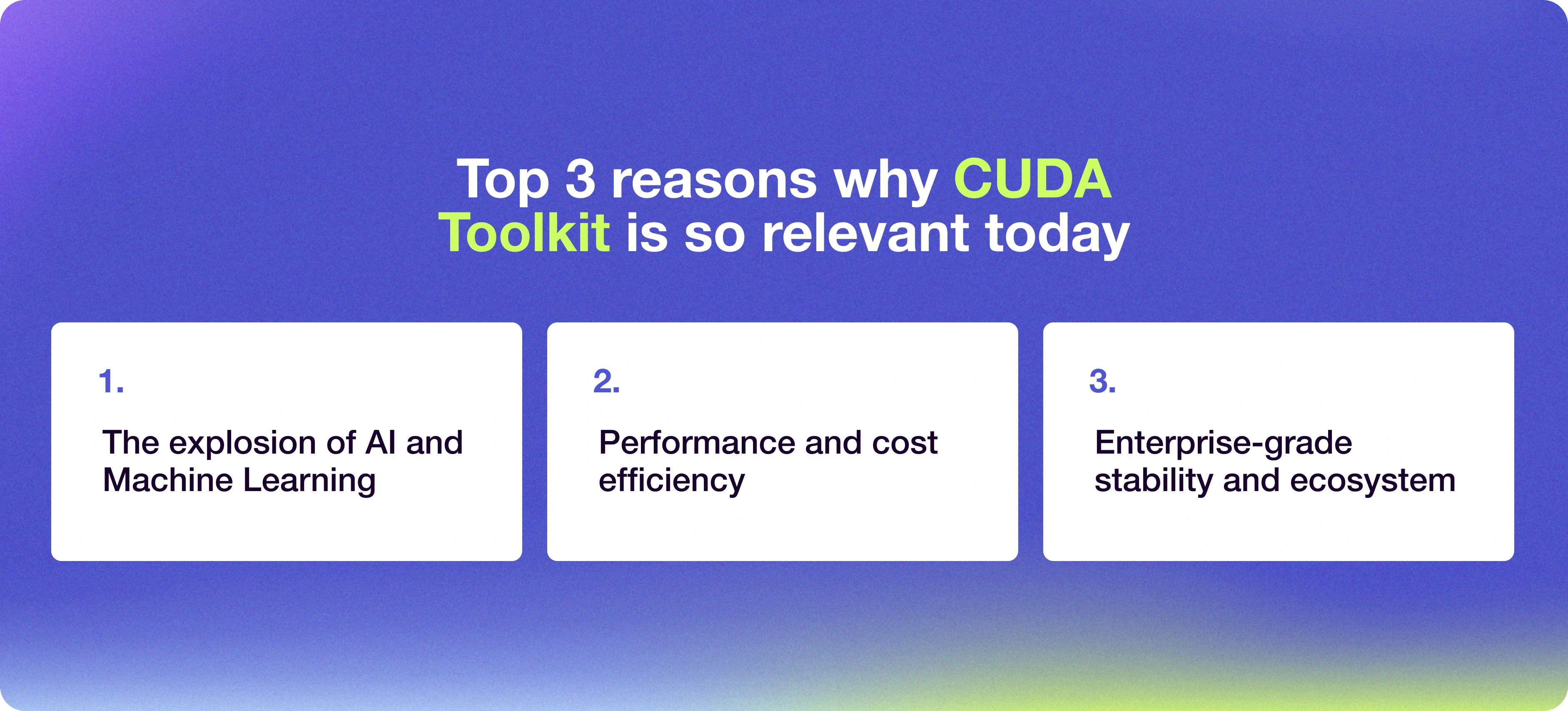

Why CUDA is so relevant today

1. The explosion of AI and machine learning

Modern AI models demand enormous computational power, and training or running them without GPUs is often impractical. CUDA has become the de facto standard for GPU-accelerated machine learning, enabling:

- Faster model training

- Scalable inference workloads

- Efficient handling of large datasets

Popular ML frameworks such as TensorFlow and PyTorch provide CUDA-enabled builds, which makes CUDA a critical dependency in many AI stacks.
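Frameworks like PyTorch expose CUDA through a device abstraction, so application code often only needs to choose where tensors live. The minimal sketch below assumes PyTorch is the framework in use. It calls `torch.cuda.is_available()`, which is part of PyTorch's public API, and falls back to the CPU when PyTorch or a CUDA-capable GPU is missing. The `pick_device` helper name is hypothetical.

```python
def pick_device() -> str:
    """Return "cuda" if PyTorch can use a CUDA GPU, otherwise "cpu"."""
    try:
        import torch  # optional: the sketch still runs without PyTorch installed
    except ImportError:
        return "cpu"
    # torch.cuda.is_available() reports whether a usable CUDA driver and GPU are present.
    return "cuda" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print(f"Running on: {pick_device()}")
```

With PyTorch installed, you can pass the returned string as the `device` argument when creating tensors, for example `torch.randn(1024, 1024, device=pick_device())`. Later matrix operations on those tensors then run on the GPU whenever one is available.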

2. Performance and cost efficiency

From a business perspective, CUDA isn’t just about speed; it’s about efficiency.

GPU-accelerated workloads can:

- Cut processing time for parallelizable jobs from days to hours

- Lower infrastructure costs when faster runs outweigh the higher price of GPU hardware

- Improve time-to-market for AI-powered products

For companies investing in AI, CUDA helps turn expensive hardware into a competitive advantage.
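How much time a GPU actually saves depends on how much of a job can run in parallel. Amdahl's law makes this explicit. If a fraction p of the runtime is accelerated by a factor s, the overall speedup is 1 / ((1 - p) + p / s). The sketch below applies the formula to a hypothetical 48-hour CPU job in which 95% of the work is parallelizable and runs 50x faster on a GPU. These numbers are illustrative assumptions, not benchmarks, and the function names are hypothetical.

```python
def amdahl_speedup(parallel_fraction: float, parallel_speedup: float) -> float:
    """Overall speedup when `parallel_fraction` of the runtime is accelerated
    by a factor of `parallel_speedup` and the rest stays serial (Amdahl's law)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if parallel_speedup <= 0:
        raise ValueError("parallel_speedup must be positive")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / parallel_speedup)


def accelerated_hours(baseline_hours: float, parallel_fraction: float, parallel_speedup: float) -> float:
    """Estimated runtime after acceleration, given the CPU-only runtime."""
    return baseline_hours / amdahl_speedup(parallel_fraction, parallel_speedup)


if __name__ == "__main__":
    # Hypothetical inputs: a 48-hour CPU job, 95% parallelizable, 50x faster on the GPU.
    speedup = amdahl_speedup(0.95, 50)
    hours = accelerated_hours(48, 0.95, 50)
    print(f"Overall speedup: {speedup:.1f}x, new runtime: {hours:.1f} hours")
```

With these inputs the job drops from 48 hours to roughly 3.3 hours. That is an overall speedup of about 14.5x rather than 50x, because the serial 5% still runs at CPU speed. However fast the GPU, the speedup in this scenario can never exceed 1 / 0.05 = 20x, which is why the serial parts of a pipeline are worth profiling too.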

3. Enterprise-grade stability and ecosystem

CUDA has been evolving for nearly two decades, and its ecosystem is mature and well-supported. Each release, including CUDA Toolkit 13.1, typically brings:

- Performance optimizations

- Better compatibility with modern hardware

- Enhanced security and debugging tools

This makes CUDA a reliable choice for production-grade systems.

Installing and managing the CUDA Toolkit

Getting started with CUDA involves a few essential steps.

Installation process

To use CUDA, developers need to install the CUDA Toolkit on their system. A working setup typically includes:

- GPU drivers

- CUDA runtime

- Development libraries

The CUDA Toolkit installer varies by operating system (Linux, Windows), CPU architecture, and Linux distribution. The toolkit version you choose must also support your GPU's architecture. A correct installation keeps your GPU, driver, and software stack compatible with one another.
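A quick sanity check after installation is to confirm that the main command-line tools are reachable. These are `nvcc`, the compiler that ships with the CUDA Toolkit, and `nvidia-smi`, which ships with the NVIDIA driver. The Python sketch below uses only the standard library, and its helper names are hypothetical. It checks only that the tools are on `PATH`, not that they work correctly.

```python
import shutil
from typing import Dict, List, Optional

# nvcc ships with the CUDA Toolkit; nvidia-smi ships with the NVIDIA driver.
EXPECTED_TOOLS = ("nvcc", "nvidia-smi")


def find_cuda_tools(tools=EXPECTED_TOOLS) -> Dict[str, Optional[str]]:
    """Map each tool name to its full path, or None if it is not on PATH."""
    return {tool: shutil.which(tool) for tool in tools}


def missing_tools(found: Dict[str, Optional[str]]) -> List[str]:
    """Return the names of tools that could not be located."""
    return [name for name, path in found.items() if path is None]


if __name__ == "__main__":
    found = find_cuda_tools()
    for name, path in found.items():
        print(f"{name}: {path or 'not found'}")
    missing = missing_tools(found)
    if missing:
        print("Missing from PATH:", ", ".join(missing))
```

On Linux, a missing `nvcc` often just means the toolkit's `bin` directory (commonly under `/usr/local/cuda`) has not been added to `PATH`. NVIDIA's installation guide lists this as a post-installation step.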

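Checking the CUDA Toolkit version

After setup, check the CUDA Toolkit version to make sure it is compatible with your frameworks and drivers. Running `nvcc --version` reports the installed toolkit version. `nvidia-smi` shows the driver version and the highest CUDA version that driver supports.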

Keeping the toolkit, driver, and framework versions aligned helps avoid runtime errors and performance issues, especially in production environments.
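The version check can be scripted. The sketch below parses the `release X.Y` line printed by `nvcc --version` (for example, `Cuda compilation tools, release 12.4, V12.4.131`) and the `CUDA Version: X.Y` field in the `nvidia-smi` header. It then applies a conservative rule of thumb: the toolkit should not be newer than the CUDA version the driver reports. NVIDIA's minor-version compatibility can relax this within a major release, so treat the result as an early warning rather than a definitive answer. The helper names and sample version numbers are assumptions.

```python
import re
import subprocess
from typing import List, Optional, Tuple

Version = Tuple[int, int]  # (major, minor)


def parse_nvcc_release(text: str) -> Optional[Version]:
    """Extract (major, minor) from `nvcc --version` output, e.g. 'release 12.4'."""
    match = re.search(r"release (\d+)\.(\d+)", text)
    return (int(match.group(1)), int(match.group(2))) if match else None


def parse_driver_cuda_version(text: str) -> Optional[Version]:
    """Extract the 'CUDA Version: X.Y' value from the `nvidia-smi` header."""
    match = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", text)
    return (int(match.group(1)), int(match.group(2))) if match else None


def toolkit_supported_by_driver(toolkit: Version, driver_max: Version) -> bool:
    """Conservative rule of thumb: the toolkit should not be newer than the
    CUDA version the driver reports. Minor-version compatibility may allow
    more within a major release; consult NVIDIA's documentation for details."""
    return toolkit <= driver_max  # tuples compare major first, then minor


def run(cmd: List[str]) -> str:
    """Run a command and return its standard output as text."""
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


if __name__ == "__main__":
    try:
        toolkit = parse_nvcc_release(run(["nvcc", "--version"]))
        driver = parse_driver_cuda_version(run(["nvidia-smi"]))
    except (FileNotFoundError, subprocess.CalledProcessError) as exc:
        print(f"Could not query CUDA tools: {exc}")
    else:
        print(f"Toolkit: {toolkit}, driver supports up to: {driver}")
        if toolkit and driver and not toolkit_supported_by_driver(toolkit, driver):
            print("Warning: the toolkit is newer than the driver reports support for.")
```

Frameworks also report the CUDA version they were built against (in PyTorch, `torch.version.cuda`). In production environments, that is a third number worth comparing.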

Why CUDA matters for companies building AI today

For companies adopting AI, CUDA is no longer optional; it’s foundational.

CUDA enables companies to build scalable AI infrastructure, accelerate experimentation and iteration, and deploy production-ready machine learning systems that can support real-world, high-demand workloads.

Whether you’re building recommendation engines, computer vision systems, or large-scale data platforms, CUDA makes it possible to move from proof of concept to real-world impact.

As AI workloads continue to grow in complexity, GPU-accelerated computing powered by CUDA will remain a critical pillar of modern software engineering.

Final thoughts

The CUDA Toolkit sits at the intersection of performance, scalability, and innovation, and has become a foundational layer for modern AI development.

CUDA plays a critical role in how companies build, optimize, and scale compute-intensive systems, particularly in machine learning and data-driven applications that rely on GPU acceleration.

For organizations investing in AI today, and planning for what comes next, leveraging CUDA is a strategic advantage, not just a technical decision. At Devlane, we help companies translate that advantage into real business outcomes by building and scaling AI-ready engineering teams with hands-on experience in GPU-accelerated computing.

Whether you’re looking to implement CUDA-based solutions, optimize existing workloads, or hire an entire team to drive your AI initiatives forward, we partner with you to make it happen.
